Nvidia Leads Generative AI Chips But There’s More Than Meets the Silicon

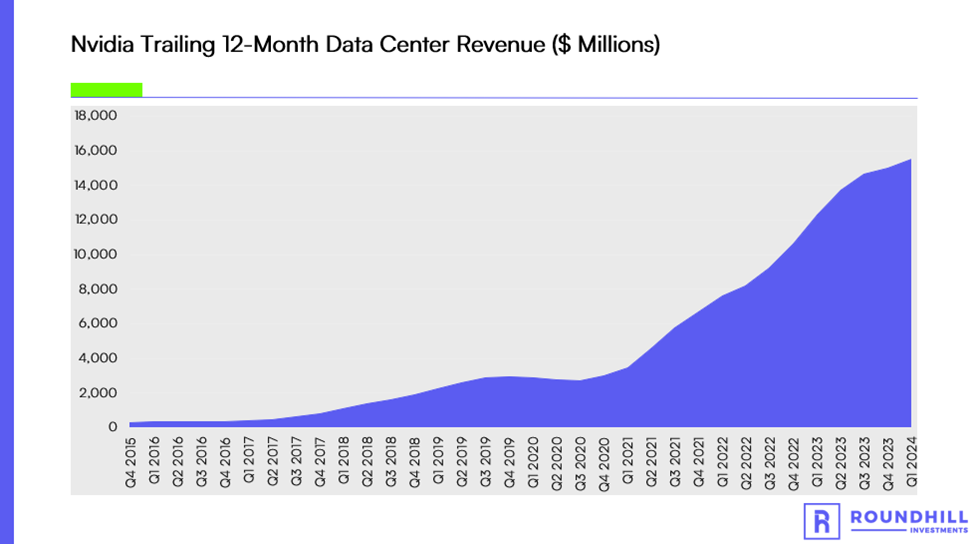

Nvidia is far and away the leader in advanced semiconductors for artificial intelligence, accounting for the bulk of industry revenue, according to Omdia. Nvidia estimates a longer-term addressable market for data center graphics processing units (GPUs) and systems of $300 billion, well north of its trailing 12-month data center revenue of $15.5 billion.

With the explosion of generative AI tools and technologies requiring even more processing power to train and implement large language models, what’s the outlook for Nvidia’s data center GPU sales? Can other companies carve out a slice of this rapidly expanding market?

Nvidia’s Leadership in $300 Billion Market

Nvidia is the clear leader in data center accelerated compute systems, with Omdia estimating its share of AI chips at 80%. Nvidia’s GPUs are better suited than CPUs to the types of calculations AI models perform, which has enabled the company to reach this position. Rivals such as AMD and Intel compete with both CPUs and GPUs but have not had the same success as Nvidia.

Specific investments described herein do not represent all investment decisions made by Roundhill Investments. The reader should not assume that investment decisions identified and discussed were or will be profitable. Specific investment advice references provided herein are for illustrative purposes only and are not necessarily representative of investments that will be made in the future.

Source: Nvidia Company Filings, Bloomberg, May 10, 2023

Aside from its leading GPU technology, Nvidia has also built a trove of software and has a large developer ecosystem trained in its products. This helps keep Nvidia’s platform the ecosystem of choice for AI researchers and operators, even as others purport to release chips that on paper might be more powerful than Nvidia’s.

Nvidia also has an edge in providing complete AI systems for these customers, thanks to its acquisition of networking chipmaker Mellanox. Leveraging Mellanox’s technology, Nvidia can more efficiently package several GPUs into a single system designed for these large-scale AI customers. Its DGX A100 system combines eight A100 GPUs with 500 GB-per-second switches.

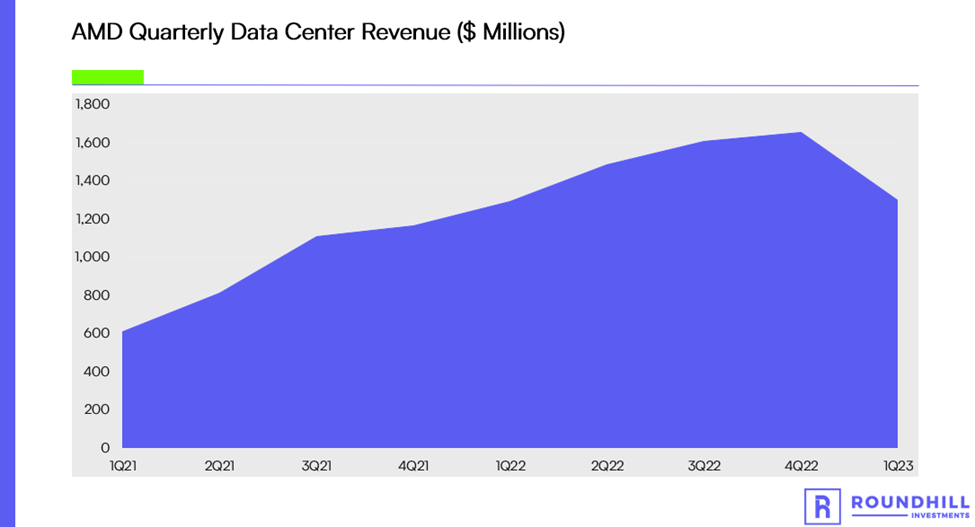

AMD May Get Boost From Microsoft

AMD’s efforts to expand into data center GPUs may get a boost from a potential partnership with Microsoft. AMD has long played second fiddle to Nvidia in GPUs, competing head-to-head in consumer-grade gaming graphics cards but unable to penetrate the premium data center market to the same degree.

Source: AMD Company Filings, Bloomberg, May 10, 2023

Bloomberg reported on May 4, 2023, that Microsoft is working with AMD to build an alternative to Nvidia’s chips, though the report says that Microsoft plans to keep working with Nvidia as well. Large hyperscale cloud companies like Microsoft, Google and Amazon have designed their own chips for various uses for years, and we expect them to do so for AI workloads, especially for training large language models internally.

Still, if Nvidia is correct and the data center chip market reaches $300 billion over the longer term, there’s room for everyone to grab a big slice of the market. If Nvidia claims half this market, its data center revenue would rise from $15.5 billion to $150 billion, an increase of nearly 10x, while peers would still be looking to split the other $150 billion of revenue.
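
The arithmetic behind this scenario can be checked with a short sketch. The two dollar figures come from the text above; the function name `scenario` and the 50% share are illustrative assumptions, not a forecast or a method used by Nvidia or Omdia.

```python
# Figures quoted in the article, in USD billions.
ADDRESSABLE_MARKET = 300.0   # Nvidia's longer-term market estimate
TRAILING_DC_REVENUE = 15.5   # Nvidia's trailing 12-month data center revenue


def scenario(market: float, share: float, current_revenue: float) -> dict:
    """Hypothetical helper: implied revenue, growth multiple, and remainder for peers."""
    implied = market * share
    return {
        "implied_revenue": implied,
        "multiple": implied / current_revenue,
        "left_for_peers": market - implied,
    }


# Assumed scenario from the text: Nvidia claims half of the market.
result = scenario(ADDRESSABLE_MARKET, 0.5, TRAILING_DC_REVENUE)
print(f"Implied revenue: ${result['implied_revenue']:.0f}B")
print(f"Multiple of trailing revenue: {result['multiple']:.1f}x")
print(f"Left for peers: ${result['left_for_peers']:.0f}B")
```

Changing the `share` argument shows how sensitive the multiple is to the assumed market share; the outcome depends entirely on whether the $300 billion estimate proves accurate.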

Others Play Catch Up But Software Lags

There’s a long list of companies, such as Qualcomm and Intel, that believe their chips can power future generative AI (GAI) use cases. There are also many companies and startups designing AI-specific chips, and some of these claim that they outperform Nvidia and others in AI workloads.

The big hurdle for these companies will be building out a software suite to match Nvidia’s and building a developer ecosystem trained in their software. This is a massive hurdle for startups to clear and makes it unlikely that they will usurp Nvidia anytime soon, even if the large market opportunity leaves room for many to grow into it.

The same is true for custom chips made by the large cloud companies like Amazon and Google. Google, for example, created its own custom Tensor Processing Units (TPUs) designed specifically for AI workloads. Google uses these internally to train AI models and makes them available to customers to train their own models via Google Cloud, but most customers still opt for cloud GPU solutions.

Conclusion

Nvidia is the leader in data center GPUs used for AI workloads and, we believe, is likely to maintain its leadership in the market. But the rapid growth in this market and the need for accelerated compute to train and implement models mean that others will also be able to latch onto this burgeoning growth opportunity.

This document does not constitute advice or a recommendation or offer to sell or a solicitation to deal in any security or financial product. It is provided for information purposes only and on the understanding that the recipient has sufficient knowledge and experience to be able to understand and make their own evaluation of the proposals and services described herein, any risks associated therewith and any related legal, tax, accounting or other material considerations. To the extent that the reader has any questions regarding the applicability of any specific issue discussed above to their specific portfolio or situation, prospective investors are encouraged to contact Roundhill Investments or consult with the professional advisor of their choosing.

Investing involves risk, including possible loss of principal. Artificial Intelligence (AI) Companies and other companies that rely heavily on technology are particularly vulnerable to research and development costs, substantial capital requirements, product and services obsolescence, government regulation, and domestic and international competition, including competition from foreign competitors with lower production costs. Stocks of such companies, especially smaller, less-seasoned companies, may be more volatile than the overall market. AI Companies may face dramatic and unpredictable changes in growth rates. AI Companies may be targets of hacking and theft of proprietary or consumer information or disruptions in service, which could have a material adverse effect on their businesses.